Please meet with your group members and get started on the lab as soon as possible. In general, in this class you will have about 2 weeks from the lab release date until the due date.

I have created a Git repository for each group. You should have already received an invite to access your repository on LoboGit. If you did not receive such an invite, contact me immediately.

The name of your repository will be teamXX, where XX are digits. You should be able to view the repository online at https://lobogit.unm.edu/net-f18/lab2/teamXX.

You will use the git tool on Linux to access the repository.

Cloning the Repository via HTTPS (Easy)

This is the easiest method of accessing your repository. Git will prompt you for your UNM NetID/password.

cd ~

mkdir -p net-f18

cd net-f18

git clone https://lobogit.unm.edu/net-f18/lab2/teamXX.git lab2

cd lab2

Here, you should be able to type "ls" (list files) and see the skeleton structure which your lab 2 submission should follow.

Cloning the Repository via SSH (More Difficult)

If you don't wish to type your NetID/password, you can instead use public-key authentication. First (if you haven't already done so), you need to create a key pair for public-key authentication (git will use this instead of username/password for authentication).

mkdir -p ~/.ssh

chmod 700 ~/.ssh

cd ~/.ssh

ssh-keygen -t rsa -b 4096 -C "YOUR_ADDRESS@unm.edu" -f id_rsa

(where YOUR_ADDRESS@unm.edu is your UNM email address). This creates a public key (id_rsa.pub) and a private key (id_rsa). KEEP YOUR PRIVATE KEY SECURE AT ALL TIMES! Open the public key (id_rsa.pub) in a text editor

pluma ~/.ssh/id_rsa.pub

and copy the contents into the "SSH Keys" section of your "Settings" on LoboGit. Once the public key is added on LoboGit, you can do

cd ~

mkdir -p net-f18

cd net-f18

git clone git@lobogit.unm.edu:net-f18/lab2/teamXX.git lab2

cd lab2

Here, you should be able to type "ls" (list files) and see the skeleton structure which your lab 2 submission should follow.

For this lab, you will be given a virtual machine (VM) with sudo permissions, i.e., you will have administrative privileges (unlike on the CS lab machines). This will give you greater freedom in experimenting with the machine's protocol stack.

BE CAREFUL when using sudo on your machine! With great power comes great responsibility!

Your VM can only be reached via one of our classroom machines. If you are not in the classroom, you can access the machines remotely:

ssh -X username@b146-xx.cs.unm.edu

(where username is your CS username, and xx is between 01 and 76).

You will need at least two terminals open on the classroom machine, so either open a new terminal, or start a second SSH connection.

In one terminal, type the following commands

cd /b146vpn

openvpn b146fw-udp-1194.ovpn

and enter your CS username/password when prompted. This authenticates you with the virtual private network (VPN) needed to access your VM.

NOTE: if you get an error about the address already being in use, that means the VPN client is already running! In this case, just skip the VPN step and continue with the following instructions.

In the other open terminal, you should be able to log into your VM

ssh -X username@10.200.2.yyy

NOTE: Replace username with the username you've been sent via email (it should be the same as your NetID username).

The 10.200.2.yyy is a unique IP address for your VM, which you will be sent via email.

DO NOT try to log into a VM until you are assigned a username and IP address via email!

Your VM password is initially set to PassXXXXXX!, where XXXXXX is the unique six-digit ID you were assigned via email at the beginning of the class.

The first time you log in, you will be asked to change your password.

When asked for "Current UNIX password", repeat the PassXXXXXX! password, and then you'll be asked to type a new password twice.

The new password can be anything you want (this VM account is not connected to your UNM account or your CS account; the VM will only be used in this class).

Once you are logged into your VM, install some utilities:

sudo apt-get install mininet xterm pcapfix python-dpkt python-numpy python-cairo make gnuplot texlive-latex-extra mupdf xpdf evince graphviz iperf3 bridge-utils

sudo sed "s/disable_lua = false/disable_lua = true/g" -i /usr/share/wireshark/init.lua

sudo sed "s/stp_enable=true' %/stp_enable=true' #%/g" -i /usr/lib/python2.7/dist-packages/mininet/node.py

mkdir -p ~/git && cd ~/git

git clone https://github.com/hgn/captcp.git

cd captcp

sudo make install

cd ~

This part of the lab is about learning to use a variety of useful networking-related tools. You will be given a virtual machine (VM) with sudo permissions, which will allow you to run the Mininet network emulator and the Wireshark packet-capture tool.

You can run Mininet as follows:

sudo mn --switch=ovsbr --controller=none --topo single,5

The above command should start Mininet with a simple topology: five hosts (h1 with IP address 10.0.0.1, h2 with 10.0.0.2, etc.) connected to a single switch. Mininet gives a prompt when it starts up, so you can type:

pingall

h1 ping h2

to make sure the hosts can communicate properly (type CTRL-C to stop the ping). Then you can type:

xterm h1 h2

to get a terminal for the (virtual) hosts. These terminals allow you to run commands on the virtual hosts, just as you would run them on the physical machine. For example, you can run an iperf client/server on h1/h2 to test the throughput.

In the h1 xterm, type the following to start an iperf server:

iperf -s

Now, in the h2 xterm, type the following to start an iperf client:

iperf -c 10.0.0.1

To exit Mininet:

exit

At times, Mininet fails to exit cleanly, leaving some components in an unexpected state. You can "clean up after" Mininet by running

sudo mn -c

You can use tcpdump to capture packets:

sudo tcpdump -i any "host 10.0.0.1" -w out_file.pcap

(the quoted string is a filter which matches on packets from/to address 10.0.0.1). This produces a PCAP file which contains a packet trace.
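To make the contents of such a trace more concrete, here is a minimal Python 3 sketch that reads the classic PCAP layout (a 24-byte global header followed by 16-byte per-packet headers) using only the standard library. The function name read_pcap_records is made up for this illustration, and the sketch assumes the file is in the classic pcap format rather than pcapng.

```python
import struct

PCAP_MAGIC_USEC = 0xA1B2C3D4  # classic pcap, microsecond timestamps
PCAP_MAGIC_NSEC = 0xA1B23C4D  # classic pcap, nanosecond timestamps


def read_pcap_records(data):
    """Return [(timestamp_seconds, captured_length), ...] from classic pcap bytes."""
    if len(data) < 24:
        raise ValueError("too short to contain a pcap global header")
    # The magic number reveals the byte order the file was written in.
    magic_le = struct.unpack("<I", data[:4])[0]
    magic_be = struct.unpack(">I", data[:4])[0]
    if magic_le in (PCAP_MAGIC_USEC, PCAP_MAGIC_NSEC):
        endian, magic = "<", magic_le
    elif magic_be in (PCAP_MAGIC_USEC, PCAP_MAGIC_NSEC):
        endian, magic = ">", magic_be
    else:
        raise ValueError("not a classic pcap file")
    frac_scale = 1e-9 if magic == PCAP_MAGIC_NSEC else 1e-6

    records = []
    offset = 24  # skip the global header
    while offset + 16 <= len(data):
        ts_sec, ts_frac, incl_len, _orig_len = struct.unpack(
            endian + "IIII", data[offset:offset + 16])
        offset += 16 + incl_len  # skip the record header and the packet bytes
        if offset > len(data):
            break  # truncated final record
        records.append((ts_sec + ts_frac * frac_scale, incl_len))
    return records
```

For example, read_pcap_records(open("out_file.pcap", "rb").read()) should return one (timestamp, length) pair per captured packet. For real analysis, Wireshark or a PCAP library is a better choice; this sketch only shows what the file holds.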

Similarly, you can use wireshark to capture packets:

sudo wireshark -k -i any -f "host 10.0.0.1"

Wireshark is a very powerful graphical program which allows you to inspect the captured packets. There are menu items which let you save the packet trace to a PCAP file, graph the throughput, etc.

Wireshark can also open a PCAP file for viewing:

wireshark file.pcap

Task A - Start Mininet as mentioned above, start capturing packets using tcpdump as described above, and start a netcat client/server pair on hosts h1 and h2. Send a line of text from the client to the server, and then exit netcat. Open the resulting packet trace in Wireshark, and briefly describe in your README file how the sequence of packets corresponds to the TCP protocol. Make sure to include the PCAP file in your submission.

Task B - Use Wireshark to create an image showing the sequence of messages being exchanged back and forth between the client and server.

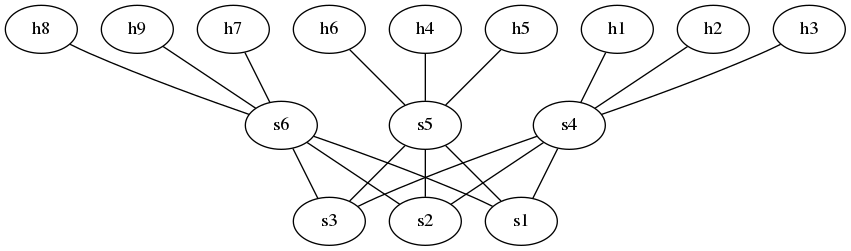

Task C - Write a program (in either C/C++, Java, or Python) which reads a DOT file and outputs a custom Mininet startup script which initializes Mininet with the topology in the DOT file.

You can assume the DOT file looks similar to the following: example.dot.

In particular, host nodes will be called hX where X is a number, and switch nodes will be called sY where Y is a number.

You can also assume that both the switch and host numbers are sequential, starting from 1, i.e., the hosts are h1, h2, ..., and the switches are s1, s2, ....
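As a starting point for the parsing step only, the following Python 3 sketch pulls host names, switch names, and links out of DOT text using the naming rules above. The contents of example.dot are not reproduced here, so the edge syntax it looks for (one edge per statement, written as h1 -- s1 or h1 -> s1) is an assumption, and parse_dot_topology is a hypothetical name. It does not handle chained edges such as s1 -- s2 -- s3, or nodes that appear in no edge.

```python
import re

# Assumed edge syntax: `h1 -- s1;` (undirected) or `h1 -> s1;` (directed).
EDGE_RE = re.compile(r"\b([hs]\d+)\s*-[->]\s*([hs]\d+)\b")


def parse_dot_topology(dot_text):
    """Return (hosts, switches, links) found in the edge statements of dot_text."""
    links = EDGE_RE.findall(dot_text)
    names = {name for link in links for name in link}
    # Sort numerically so that h10 comes after h9.
    hosts = sorted((n for n in names if n[0] == "h"), key=lambda n: int(n[1:]))
    switches = sorted((n for n in names if n[0] == "s"), key=lambda n: int(n[1:]))
    return hosts, switches, links
```

Turning the three lists into addHost, addSwitch, and addLink calls is the remaining part of the task.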

Given a DOT file such as example.dot, you can produce a PDF visualizing the graph as follows:

dot -Tpdf -o example.pdf example.dot

The following is an example Mininet startup script with a simple 3-switch ring topology. Save it as emptynet.py:

#!/usr/bin/python

"""
This example shows how to create an empty Mininet object
(without a topology object) and add nodes to it manually.
"""

from mininet.net import Mininet
from mininet.node import Controller, OVSBridge
from mininet.nodelib import LinuxBridge
from mininet.cli import CLI
from mininet.log import setLogLevel, info

class MyBridge(OVSBridge):
    "Custom OVSBridge."
    def __init__(self, *args, **kwargs):
        kwargs.update(stp='True')
        OVSBridge.__init__(self, *args, **kwargs)

# NOTE - to use LinuxBridge instead of OVSBridge, you must install:
# sudo apt-get install bridge-utils
class MyBridge2(LinuxBridge):
    "Custom LinuxBridge."
    def __init__(self, name, stp=True, prio=None, **kwargs):
        LinuxBridge.__init__(self, name, stp, prio, **kwargs)

def emptyNet():
    "Create an empty network and add nodes to it."
    setLogLevel("info")
    #setLogLevel("debug")

    net = Mininet( controller=None, switch=MyBridge )

    #info( '*** Adding controller\n' )
    #net.addController( 'c0' )

    info( '*** Adding hosts\n' )
    h1 = net.addHost( 'h1', ip='10.0.0.1' )
    h2 = net.addHost( 'h2', ip='10.0.0.2' )
    h3 = net.addHost( 'h3', ip='10.0.0.3' )

    info( '*** Adding switch\n' )
    s1 = net.addSwitch( 's1' )
    s2 = net.addSwitch( 's2' )
    s3 = net.addSwitch( 's3' )

    info( '*** Creating links\n' )
    net.addLink( h1, s1 )
    net.addLink( h2, s2 )
    net.addLink( h3, s3 )
    net.addLink( s1, s2 )
    net.addLink( s2, s3 )
    net.addLink( s3, s1 )

    info( '*** Starting network\n')
    net.start()
    #net.staticArp()
    net.waitConnected() # https://github.com/mininet/mininet/wiki/FAQ

    info( '*** Running CLI\n' )
    CLI( net )

    info( '*** Stopping network' )
    net.stop()

if __name__ == '__main__':
    emptyNet()

To run Mininet using this startup script, do:

sudo ./emptynet.py

This part of the lab is about investigating the effects of congestion in networks. Again, start Mininet, this time with two hosts:

sudo mn --switch=ovsbr --controller=none --topo single,2

For this part of the lab, modify your client from Lab 1 (or use the ones I have provided) to measure flow-completion time (FCT), i.e., the amount of time from the beginning of the HTTP request until the last byte of the resulting HTML page is received.
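The FCT definition above can be sketched in Python 3 as follows. This is not the provided client, just a minimal illustration; measure_fct is a hypothetical name. It assumes the server closes the connection once the response is complete (typical for HTTP/1.0), and it starts the clock before the TCP connection is opened, so the handshake is included in the measurement.

```python
import socket
import time


def measure_fct(host, port, path="/", timeout=60.0):
    """Return (fct_seconds, bytes_received) for one HTTP/1.0 GET request."""
    request = "GET {} HTTP/1.0\r\nHost: {}\r\n\r\n".format(path, host).encode("ascii")
    received = 0
    start = time.perf_counter()  # assumption: the handshake counts toward the FCT
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(request)
        while True:
            chunk = sock.recv(65536)
            if not chunk:  # the server closed the connection: response complete
                break
            received += len(chunk)  # counts response headers as well as the body
    return time.perf_counter() - start, received
```

In an h1 xterm, something like measure_fct("10.0.0.2", 8080, "/index.html") would time a single request; the path here is only a placeholder.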

First, start a single server on h2, request a large file using your client on h1, and write down the FCT. Make sure the file transfer lasts 10-30 seconds (if needed, run your client in a loop, requesting the file multiple times). Note that the client/server I have provided has command-line options which allow you to request a random file of a given size and/or perform the same request a given number of times. Additionally, the client I have provided prints out the FCT for each request (when the verbose option is enabled).

Next, start two servers on h2, and simultaneously request a large file from each, using two clients on h1. Note that each server you start will need to use a different port (start with 8080 and work your way up). Again, write down the FCT. Repeat this process, increasing the number of simultaneous connections, until you have 3-5 measurements for the FCT. You should be able to see the average flow-completion time increasing as you increase the number of simultaneous connections, due to congestion on the single route between the hosts.

Task A - Use Gnuplot to make a plot of the average FCT (your measure of congestion) versus the number of simultaneous connections.

Task B - Next, use the Linux Traffic Control functionality (see also the HOWTO) to simulate loss and packet-corruption in the network. At the Mininet prompt, type:

s1 tc qdisc replace dev s1-eth1 root netem

s1 tc qdisc change dev s1-eth1 root netem loss 25%

and run iperf. Do this for several different values for the loss percentage, and make a plot of the iperf throughput versus loss.

Task C - You can simulate packet-corruption using a similar mechanism:

s1 tc qdisc replace dev s1-eth1 root netem

s1 tc qdisc change dev s1-eth1 root netem corrupt 25%

Make a plot of the iperf throughput versus corruption percentage for this case as well.
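Since the loss and corruption experiments repeat the same command with different percentages, a small helper can generate the Mininet prompt lines for a whole sweep. The Python sketch below is only a convenience; netem_commands is a hypothetical name, and it assumes the "tc qdisc replace ... root netem" line has already been run once so that the "change" form applies.

```python
def netem_commands(switch, interface, impairment, percentages):
    """Return one Mininet CLI line per percentage for a netem loss/corrupt sweep."""
    if impairment not in ("loss", "corrupt"):
        raise ValueError("impairment must be 'loss' or 'corrupt'")
    return [
        "{} tc qdisc change dev {} root netem {} {}%".format(
            switch, interface, impairment, pct)
        for pct in percentages
    ]


if __name__ == "__main__":
    # Print the lines to paste at the Mininet prompt, one per measurement.
    for line in netem_commands("s1", "s1-eth1", "loss", [0, 5, 10, 25]):
        print(line)
```

Run iperf after each line and record the throughput next to the percentage that was in effect.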

TCP has utilized various congestion control approaches through the years. Modern Linux operating systems make it easy to experiment with these various approaches. In your VM terminal, type

ls /lib/modules/`uname -r`/kernel/net/ipv4/

to see the list of TCP congestion control modules available. For example, tcp_vegas.ko is the module for the TCP Vegas congestion control approach. To load the module, you can type

sudo modprobe tcp_vegas

Now, you can list the loaded congestion control modules:

sysctl net.ipv4.tcp_available_congestion_control

and check which module is currently in use:

sysctl net.ipv4.tcp_congestion_control

Task A - Pick three different congestion control approaches (e.g., Reno, Vegas, and Cubic), and briefly describe the differences between these.

Task B - On your VM, change the congestion control approach to the first one you've chosen:

sudo sysctl -w net.ipv4.tcp_congestion_control=reno

Now, start Mininet and use tcpdump to begin capturing packets, and run your client/server for 10-20 seconds. You should now have a pcap file corresponding to the client/server test.

Repeat this process for each of your chosen congestion control modules. Once you have the three pcap files, plot the throughput versus time for all of them, preferably on the same graph if possible, and then the time sequence graph for all of them (again, preferably on the same graph), and briefly describe the results.
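If you would rather compute the throughput curve yourself than rely on Wireshark's graphs, the idea is to group packets into fixed-width time bins and sum their sizes. The Python sketch below assumes you have already extracted (timestamp, length) pairs from each pcap file by some means; throughput_series is a hypothetical name, and the lengths are whatever you feed it (captured frame sizes include protocol headers).

```python
def throughput_series(packets, bin_width=1.0):
    """Return [(bin_start_seconds, bits_per_second), ...] relative to the first packet."""
    packets = sorted(packets)
    if not packets:
        return []
    t0 = packets[0][0]
    n_bins = int((packets[-1][0] - t0) / bin_width) + 1
    bytes_per_bin = [0] * n_bins
    for timestamp, length in packets:
        bytes_per_bin[int((timestamp - t0) / bin_width)] += length
    # Convert the byte count in each bin to bits per second.
    return [(i * bin_width, total * 8 / bin_width)
            for i, total in enumerate(bytes_per_bin)]
```

Writing each (time, throughput) pair as a line of two columns gives a data file that Gnuplot can plot directly, and because every series starts at time zero, the three algorithms can share one graph.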

NOTE: the mergecap tool is provided along with Wireshark; it allows you to combine multiple PCAP files into one. Additionally, the editcap tool allows you to perform various modifications to a PCAP file (for example, time-shift all entries by a certain amount). If, for some reason, you encounter a corrupted PCAP file, you may be able to repair it using pcapfix.

NOTE: there is a dedicated menu item for plotting a time sequence graph in Wireshark, but you can also plot this (and many other types of graphs) using the I/O Graph functionality. For example, to create the time sequence graph, you could set the Y axis to the average of tcp.seq. The "display filter" can be something like, e.g., ip.src==10.0.0.1 to select only packets from a specific source.

NOTE: you can also change the congestion control algorithm for a given application by using the setsockopt function in C.
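The same socket option is reachable from Python 3.6+ on Linux through socket.TCP_CONGESTION, which may be convenient if your client is written in Python. The sketch below is a minimal illustration with hypothetical helper names. Note that an unprivileged process can only select algorithms listed in net.ipv4.tcp_allowed_congestion_control.

```python
import socket


def set_congestion_control(sock, name):
    """Select a TCP congestion control algorithm for one socket (Linux only)."""
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_CONGESTION, name.encode("ascii"))


def get_congestion_control(sock):
    """Return the name of the algorithm the socket is currently using."""
    raw = sock.getsockopt(socket.IPPROTO_TCP, socket.TCP_CONGESTION, 16)
    return raw.split(b"\0", 1)[0].decode("ascii")  # strip trailing NUL padding
```

Call set_congestion_control(sock, "vegas") on the client socket before connecting; it raises OSError if the named algorithm is not loaded or not allowed.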

Clearly document (using source-code comments) all of your work. Also fill in the README.md file (preferably using Markdown syntax) with a brief writeup about the design choices you made in your code.

Providing adequate documentation helps me see that you understand the code you've written.

Task A (Optional) - Install and use TCPTuner, and try to reproduce the graphs in Figure 3 and Figure 4.

Task B - Next, experiment with changing some parameters of an existing congestion control algorithm. The Linux TCP congestion control algorithms are deployed as kernel modules. You can compile, e.g., the BBR TCP congestion control module on your VM.

NOTE: BE CAREFUL when working with kernel modules, especially related to networking! If you try to load a buggy kernel module, you can "crash" the kernel (kernel panic), which will render your system unusable and require a restart. Additionally, even if this does not happen, a buggy networking-related kernel module can kill your connection, if you're logged in via SSH. I would highly recommend that you do any kernel programming on a local VM, e.g., VirtualBox on your personal laptop/PC! This will ensure that you can easily do a full restart in case of problems.

If you understand the risks, and still want to work with kernel modules on your VM, you'll first need to upgrade to the 4.15 kernel:

sudo apt-get install linux-generic-hwe-16.04

This will install the newer kernel, but you'll need to restart your VM to boot using the new kernel:

sudo shutdown -r 0

This will terminate the connection with your VM. Wait about 30-60 seconds while the VM reboots, and then reconnect via SSH.

You can type uname -r to confirm that you're now using the 4.15 kernel.

Now, to build the BBR TCP congestion control module, do the following:

wget https://raw.githubusercontent.com/torvalds/linux/600647d467c6d04b3954b41a6ee1795b5ae00550/net/ipv4/tcp_bbr.c

mv tcp_bbr.c my_tcp_bbr.c

touch Makefile

make -C /lib/modules/`uname -r`/build M=`pwd` modules obj-m=my_tcp_bbr.o

This will produce a kernel module my_tcp_bbr.ko. You can load the module by typing

sudo insmod my_tcp_bbr.ko

To check that the module is loaded, you can list all loaded modules, and look for my_tcp_bbr:

lsmod

To remove the module, type

sudo rmmod my_tcp_bbr

See Task B in Part 3 of the lab for how to make the Linux TCP implementation use your my_tcp_bbr congestion control module.

For this task, pick one or two parameters defined in the BBR algorithm, briefly describe how the parameter(s) affect performance, and then make a plot of how the parameter(s) affect throughput versus time, for example while running your client/server as in Part 3.

I will automatically grab a snapshot of the master branch of your Lab 2 repository at the deadline.

Make sure you push all of your work before the deadline!

I should be able to type "make" in the main directory, and that should build all of the tools for me. Be sure to set up the Makefile as needed.